Stabilized Annotations for Mobile Remote Assistance

Collaborators: Omid Fakourfar, Kevin Ta, Richard Tang, Scott Bateman, and Anthony Tang

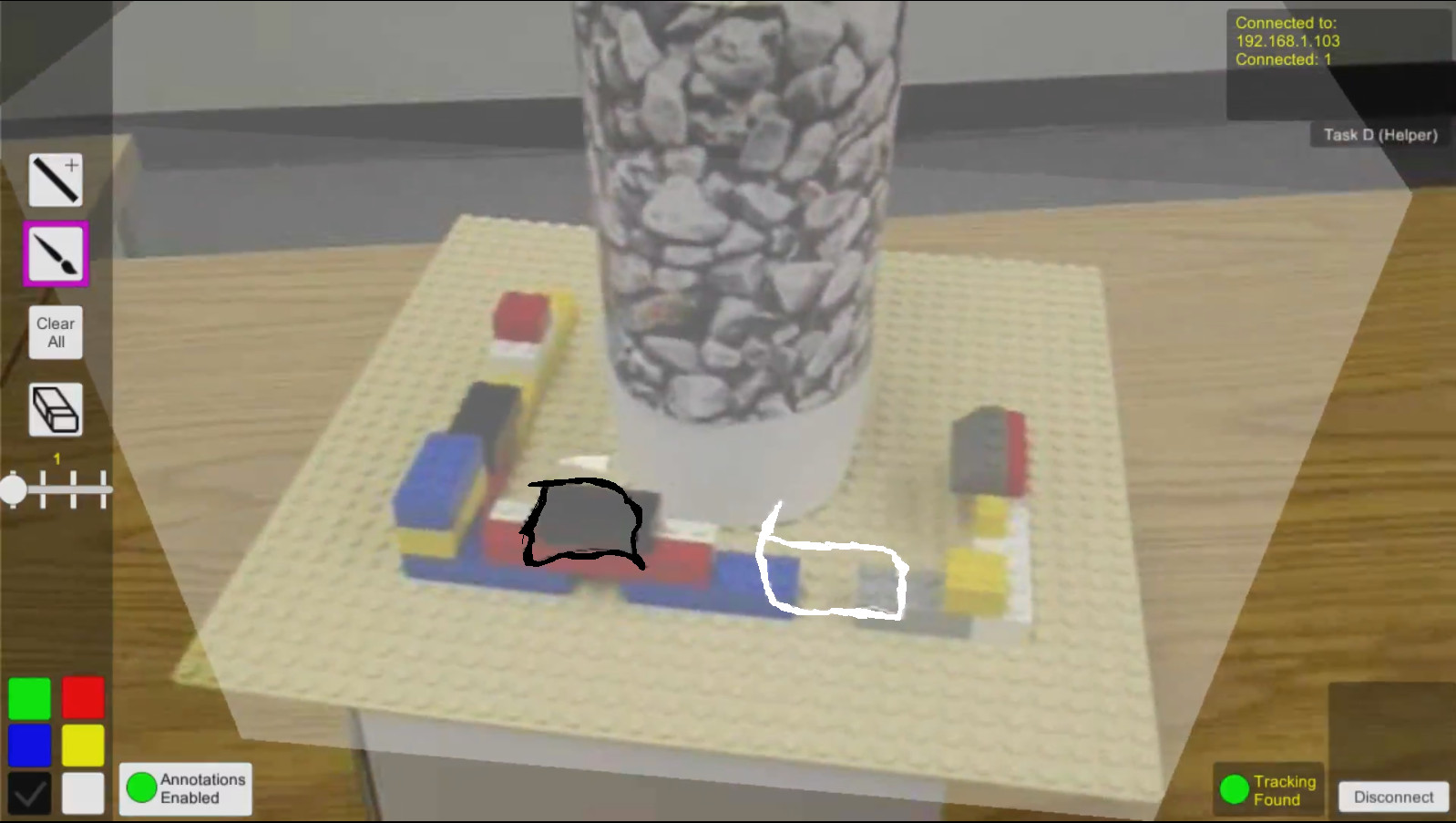

Tools: Unity3D, C#, Vuforia, Moverio BT-200

Abstract

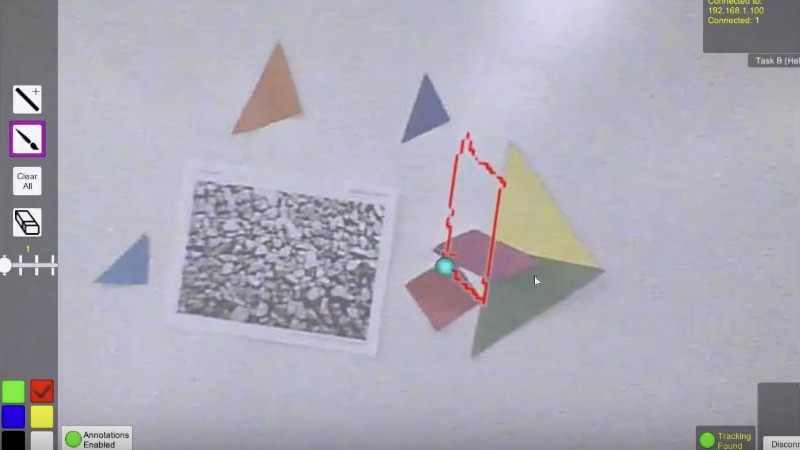

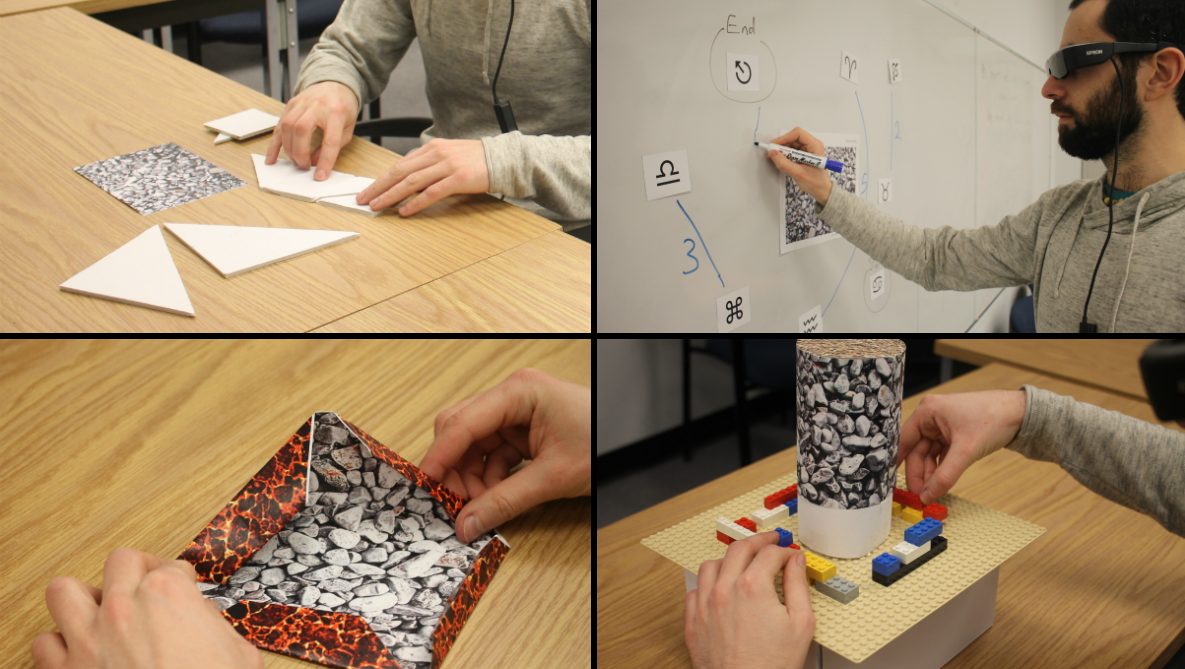

Recent mobile technology has opened new opportunities for building remote assistance systems. However, mobile support systems present a particular challenge: the user holds both the camera and the display, which leads to shaky video. As a result, when someone points at or draws annotations on the video, the intended target often moves, and the gesture loses its intended meaning. To address this problem, we investigate annotation stabilization techniques, which keep annotations attached to their intended location. We studied two annotation systems, using three different forms of annotations, on both tablets and head-mounted displays. Our analysis suggests that stabilized annotations and head-mounted displays are beneficial only in certain situations. However, the simplest approach, automatically freezing the video while annotations are being drawn, was surprisingly effective in helping participants complete remote assistance tasks.

Full paper at the ACM Conference on Human Factors in Computing Systems (CHI 2016); acceptance rate 23%.
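The stabilization idea above can be illustrated with a small sketch. This is not the system from the paper, which was built in Unity3D with Vuforia. It assumes a planar target whose tracked pose for each video frame has already been reduced to a 3×3 homography that maps target coordinates to screen pixels. The names `apply_homography`, `invert3`, and `StabilizedStroke` are hypothetical. Each drawn point is converted into target coordinates using the pose of the frame on which it was drawn. It is then re-projected with the current frame's homography, so the annotation stays on the target while the camera moves.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Matrix3 = List[List[float]]


def apply_homography(m: Matrix3, p: Point) -> Point:
    """Map a 2D point through a 3x3 homography (homogeneous divide by w)."""
    x, y = p
    u = m[0][0] * x + m[0][1] * y + m[0][2]
    v = m[1][0] * x + m[1][1] * y + m[1][2]
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return (u / w, v / w)


def invert3(m: Matrix3) -> Matrix3:
    """Invert a 3x3 matrix via its adjugate; raises if it is singular."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    # First-column cofactors, reused for the determinant.
    c11 = e * i - f * h
    c12 = -(d * i - f * g)
    c13 = d * h - e * g
    det = a * c11 + b * c12 + c * c13
    if abs(det) < 1e-12:
        raise ValueError("homography is not invertible")
    adj = [
        [c11, -(b * i - c * h), b * f - c * e],
        [c12, a * i - c * g, -(a * f - c * d)],
        [c13, -(a * h - b * g), a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


class StabilizedStroke:
    """Hypothetical stroke anchored to a tracked planar target."""

    def __init__(self) -> None:
        # Points are stored in target coordinates, not screen coordinates.
        self.ref_points: List[Point] = []

    def add_screen_point(self, screen_pt: Point, ref_to_screen: Matrix3) -> None:
        # Undo the pose of the frame the point was drawn on.
        screen_to_ref = invert3(ref_to_screen)
        self.ref_points.append(apply_homography(screen_to_ref, screen_pt))

    def screen_points(self, ref_to_screen: Matrix3) -> List[Point]:
        # Re-project every stored point with the current frame's pose.
        return [apply_homography(ref_to_screen, p) for p in self.ref_points]


if __name__ == "__main__":
    frame1 = [[1, 0, 100], [0, 1, 50], [0, 0, 1]]  # target offset (100, 50)
    frame2 = [[1, 0, 120], [0, 1, 40], [0, 0, 1]]  # camera shake: offset (120, 40)
    stroke = StabilizedStroke()
    stroke.add_screen_point((110, 60), frame1)
    print(stroke.ref_points)             # [(10.0, 10.0)]
    print(stroke.screen_points(frame2))  # [(130.0, 50.0)]
```

In this example, a point drawn at screen position (110, 60) while the target appears at offset (100, 50) is stored as (10, 10) in target coordinates. After the target appears to shift to (120, 40), the point is redrawn at (130, 50). The freeze-while-drawing approach from the abstract avoids this bookkeeping during drawing. Because the displayed frame is held still while the stroke is drawn, plain screen coordinates line up with the image being annotated.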

Paper: https://dl.acm.org/citation.cfm?id=2858171

Citation: Omid Fakourfar, Kevin Ta, Richard Tang, Scott Bateman, and Anthony Tang. 2016. Stabilized Annotations for Mobile Remote Assistance. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16). ACM, New York, NY, USA, 1548–1560. DOI: https://doi.org/10.1145/2858036.2858171