Bod-IDE: An Augmented Reality Sandbox for eFashion Garments

Supervisors: Lora Oehlberg, Ehud Sharlin

Tools: Unity3D, C#, Kinect v2, OpenCV, Node.js, JavaScript, Phidgets, RFID

Abstract

Poster presented at DIS 2018, Hong Kong.

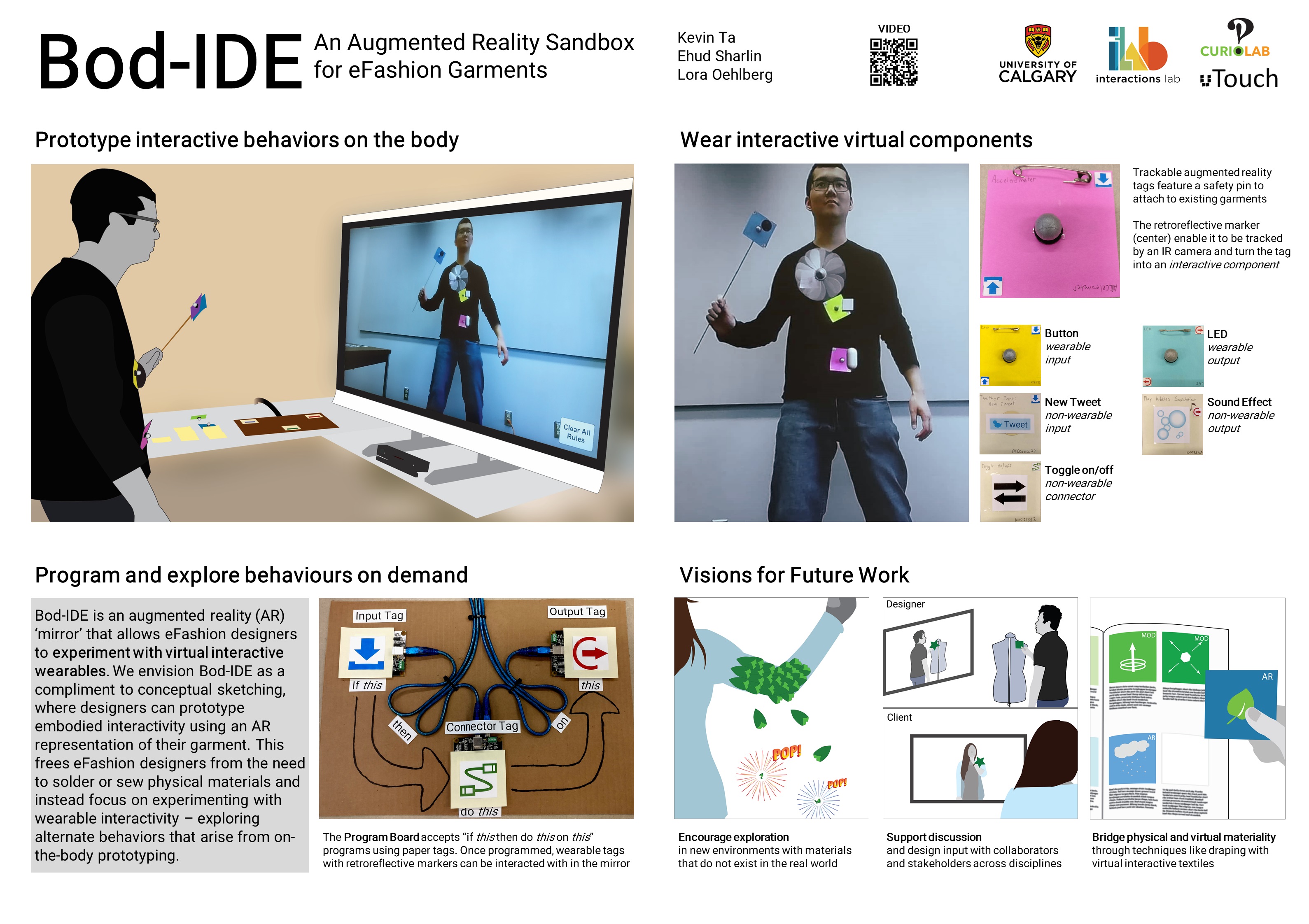

Electronic fashion (eFashion) garments use technology to augment the human body with wearable interaction. In developing ideas, eFashion designers need to prototype the role and behavior of the interactive garment in context; however, current wearable prototyping toolkits require semi-permanent construction with physical materials that cannot easily be altered. We present Bod-IDE, an augmented reality ‘mirror’ that allows eFashion designers to create virtual interactive garment prototypes. Designers can quickly build, refine, and test on-the-body interactions without the need to connect or program electronics. By envisioning interaction with the body in mind, eFashion designers can focus more on reimagining the relationship between bodies, clothing, and technology.

**Citation:** Kevin Ta, Ehud Sharlin, and Lora Oehlberg. 2018. Bod-IDE: An Augmented Reality Sandbox for eFashion Garments. In Proceedings of the 2018 ACM Conference Companion Publication on Designing Interactive Systems (DIS ’18 Companion). ACM, New York, NY, USA, 33-37. DOI: https://doi.org/10.1145/3197391.3205408

A Usage Scenario

**(a) Virtual cloth tag fluttering in the wind when activated by (b) a button.**

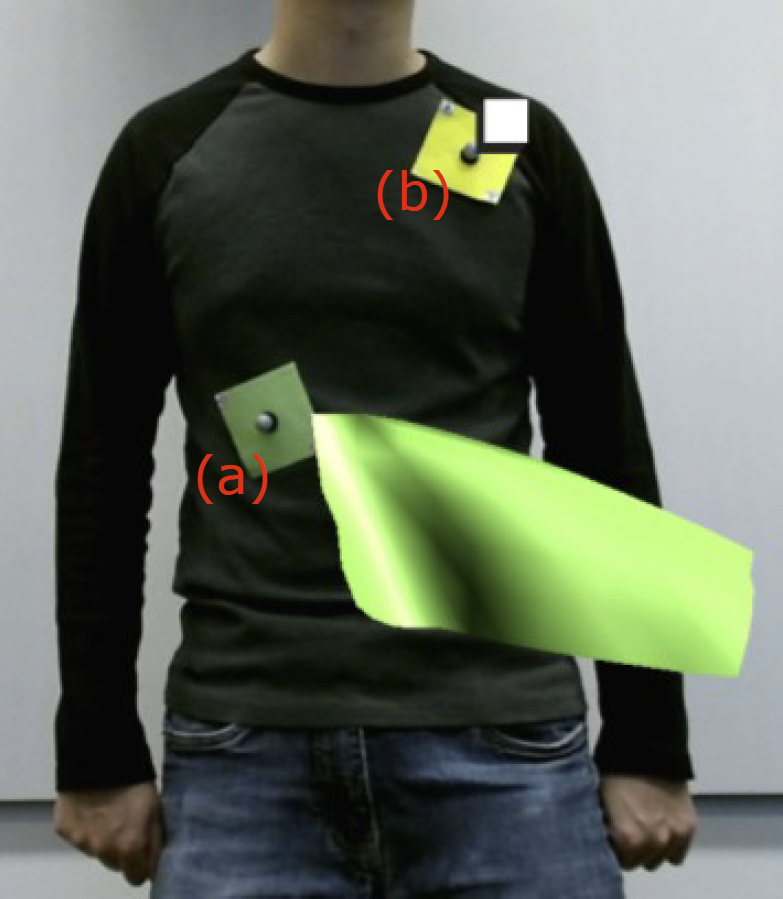

Katie, an eFashion designer, wants to create a steampunk-styled jacket that represents her client Sasha’s Twitter feed activity. Katie creates concept sketches that capture the aesthetic and basic behavior of the jacket. With her vision in mind, she turns to her augmented reality mirror (Bod-IDE), starting with the Twitter feed module. She creates one of the jacket’s envisioned behaviors, animating the jacket on a retweet, by using the program board (Figure 5) to link the Twitter tag to various output components that could communicate ‘aliveness’, settling on LEDs and fans. Using safety pins, she physically attaches the tags on top of an existing jacket that she plans to modify for the project, with the LED tags placed on the sides of her shoulders and the fans along the inside of her forearms…

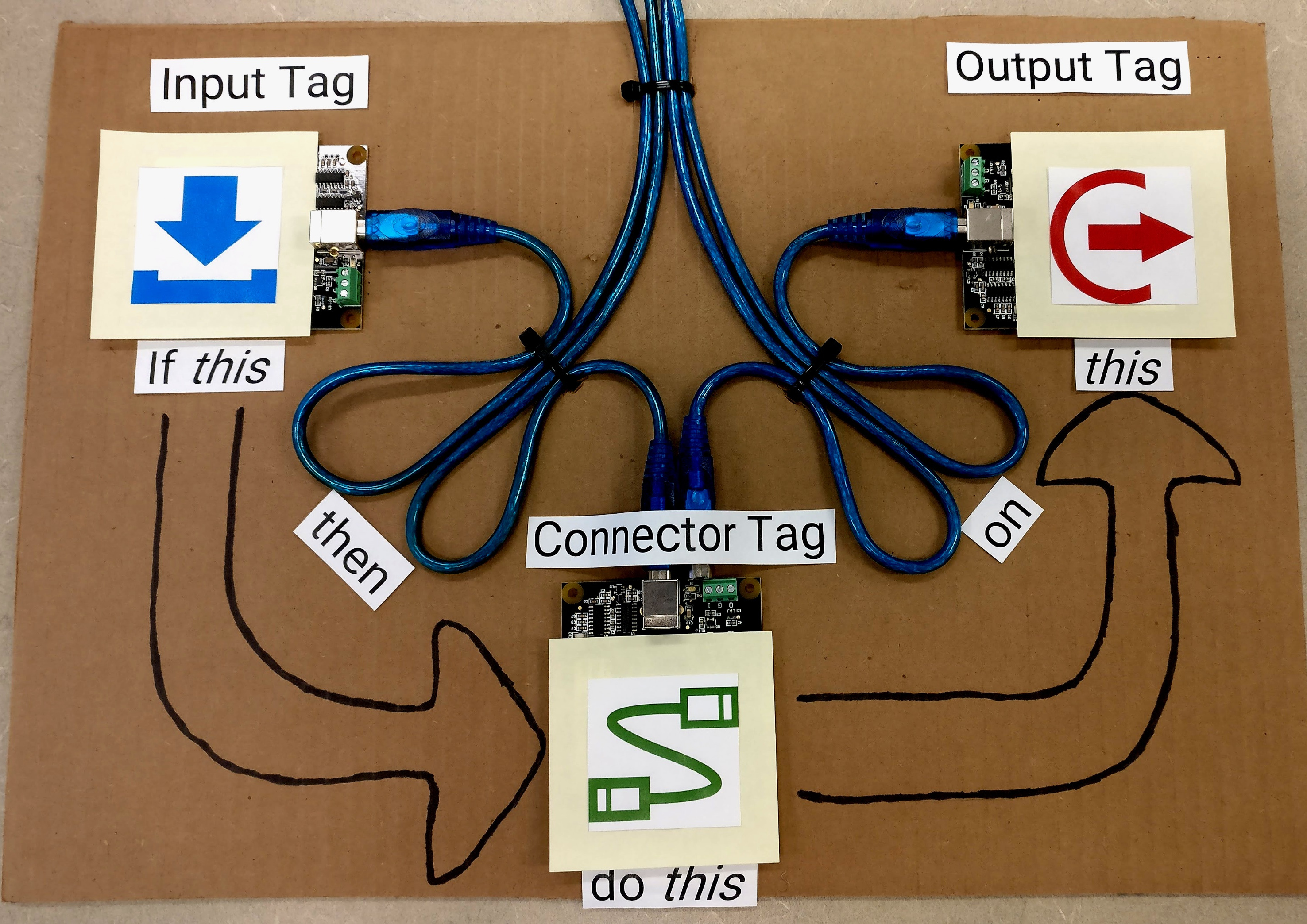

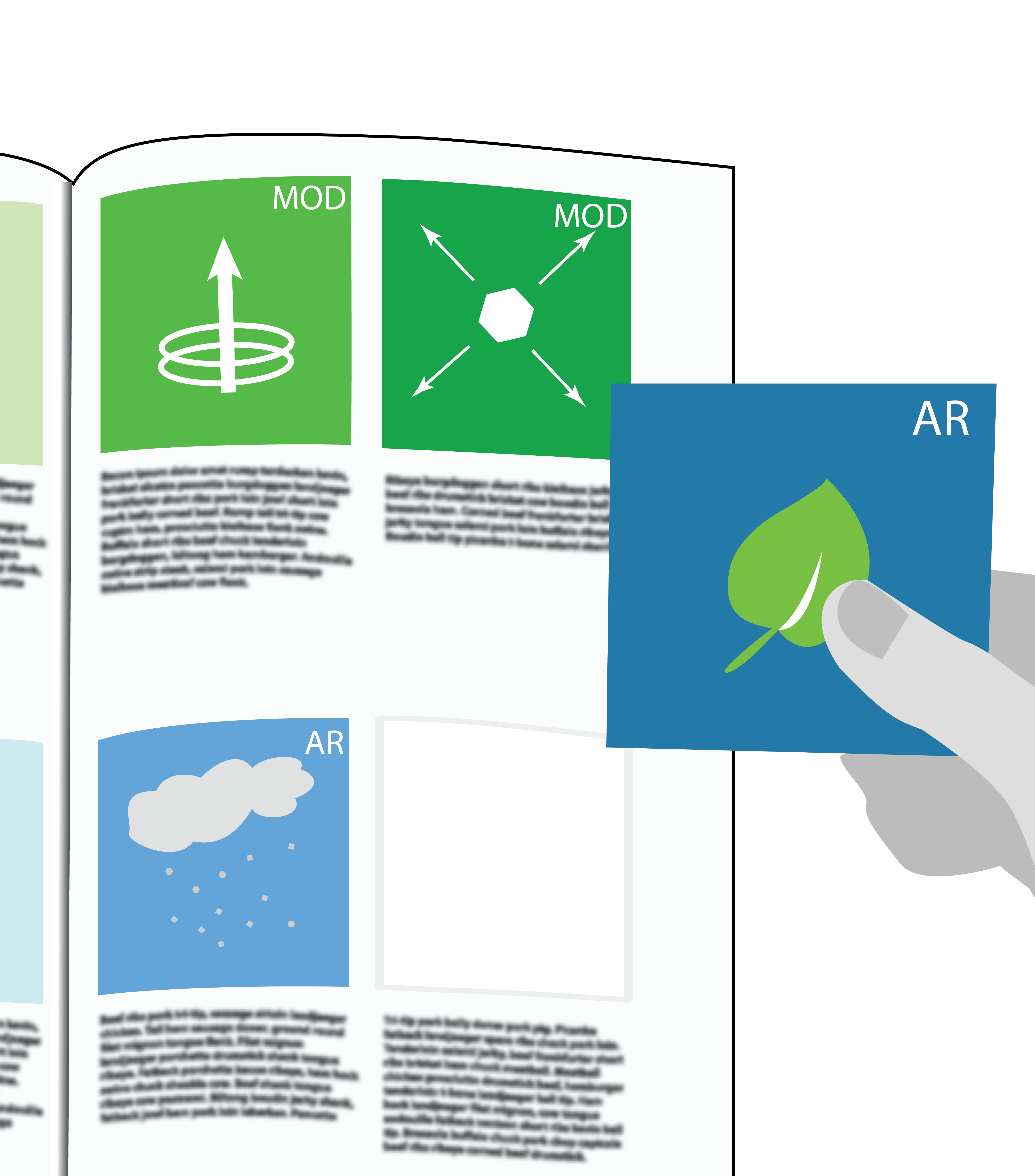

**The program board using Phidget RFID readers to register program behaviors. Designers place tags on top of the bins (icons).**

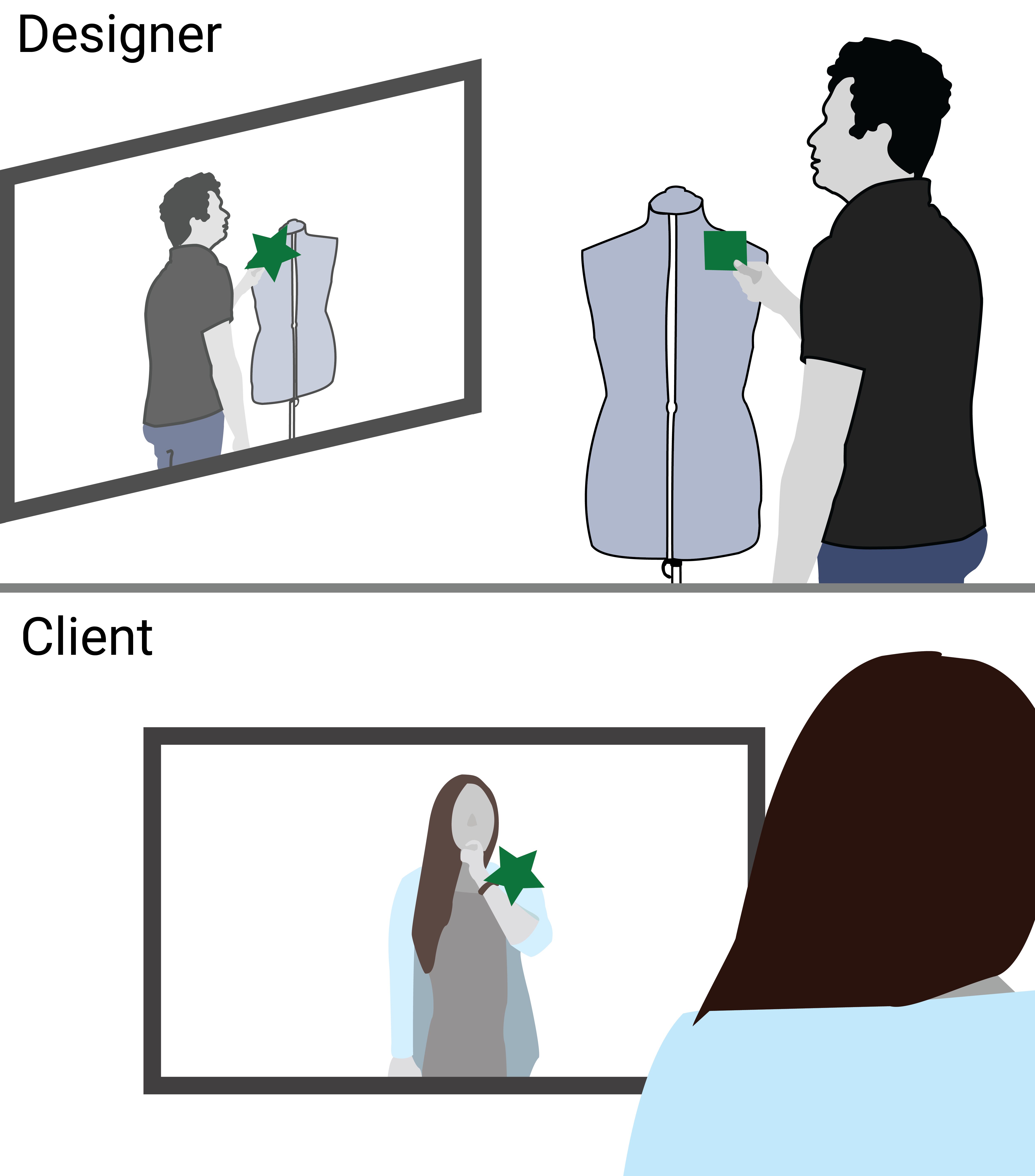

To test this implementation, she wears the jacket and sits on a chair in front of a coffee table to simulate a café space. The mirror animates the tags with virtual components, blinking the LED on every retweet. She notices that the LEDs flicker too often because Sasha’s Twitter feed is popular, and that this would draw too much public attention. She taps alternative Connector Tags on the program board to explore different mappings between the Twitter tag and the LEDs, settles on a Connector Tag that blinks the LED after a certain threshold, and tries again in front of the mirror. After some refinement, she invites her client Sasha to try on the jacket in the mirror. Sasha mentions that she would like the fans on the sides of her arms, since they have a clockwork automaton aesthetic. Katie quickly swaps the locations of the LEDs and fans, then eagerly asks Sasha to try the jacket again as they explore more interactive behaviors together.
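The mapping Katie explores in this scenario, an input tag linked to an output tag through a swappable Connector Tag, can be illustrated with a small sketch. This is not Bod-IDE's implementation: every name below (`everyEventConnector`, `thresholdConnector`, `link`, `countBlinks`) is hypothetical, and the sketch assumes that "after a certain threshold" means "once per N retweets", which the scenario does not specify.

```javascript
// Hypothetical sketch; none of these names come from Bod-IDE itself.

// A connector decides, for each input event, whether the output should fire.
// This one passes every event through (the "blink on every retweet" mapping).
function everyEventConnector() {
  return () => true;
}

// Assumed reading of the threshold Connector Tag:
// fire once per `threshold` input events, then start counting again.
function thresholdConnector(threshold) {
  let count = 0;
  return () => {
    count += 1;
    if (count < threshold) return false;
    count = 0;
    return true;
  };
}

// Link an input to an output through a connector.
// Returns the handler to call whenever the input produces an event.
function link(connector, output) {
  return (event) => {
    if (connector(event)) output(event);
  };
}

// Simulate a burst of retweets and count how often the virtual LED blinks.
function countBlinks(connector, retweets) {
  let blinks = 0;
  const onRetweet = link(connector, () => { blinks += 1; });
  for (let i = 0; i < retweets; i += 1) onRetweet({ type: "retweet" });
  return blinks;
}

console.log(countBlinks(everyEventConnector(), 10)); // 10
console.log(countBlinks(thresholdConnector(5), 10)); // 2
```

Under these assumptions, swapping the connector changes how often the LED responds to a busy feed without touching the input or the output, which mirrors how Katie only swaps the Connector Tag on the program board.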

Future Work

Encouraging Exploration. Designers can explore impractical materials, like limitless exploding scales with comic book "POP!" effects. These imaginary materials can help reveal alternative ways to express a message, rather than how to implement it.

Support Discussion. Design input can be shared across collaborators, stakeholders, and disciplines remotely.

Bridging virtual and physical materiality. A future version of Bod-IDE could use both virtual and physical materials. A swatchbook could help facilitate the use of physical materials with virtual materials as well.