AR Stabilized 3D Hand Gestures

Tools: Unity3D, C#, Google Cardboard, Vuforia, Intel RealSense

Supervisor: Anthony Tang

Abstract

Modern video conferencing tools can be used for remote collaboration, but they cause confusion when gestures are used in remote-expert scenarios. Experts often use hand gestures as shortcuts to describe what they mean; however, because the cameras do not capture these gestures in their intended context, communication ends up focused on disambiguation rather than on the task. Prior work has often captured either the workspace or the gesture in 2D, which is suited only to tasks on a flat plane. These systems cannot capture or present gestures in a meaningful way when objects are occluded in depth. Real-world tasks are often three-dimensional, which requires users to take on different perspectives and make gestures with depth. I built a prototype system to investigate making and perceiving hand gestures in stereoscopic 3D for remote-expert scenarios. In this approach, users can make gestures that communicate position and scale within a 3D space. In addition, users can work at any angle to the workspace, moving around as necessary to overcome occlusion. A pilot study was conducted to determine design factors that affect user behavior when hand gestures are captured and displayed in stereoscopic 3D during similar real-world tasks. My findings suggest that future work should focus on improving the prototype’s fidelity and examining a wider variety of gestures under different rendering scenarios.

**Paper:** Download PDF

Poster: Download Poster

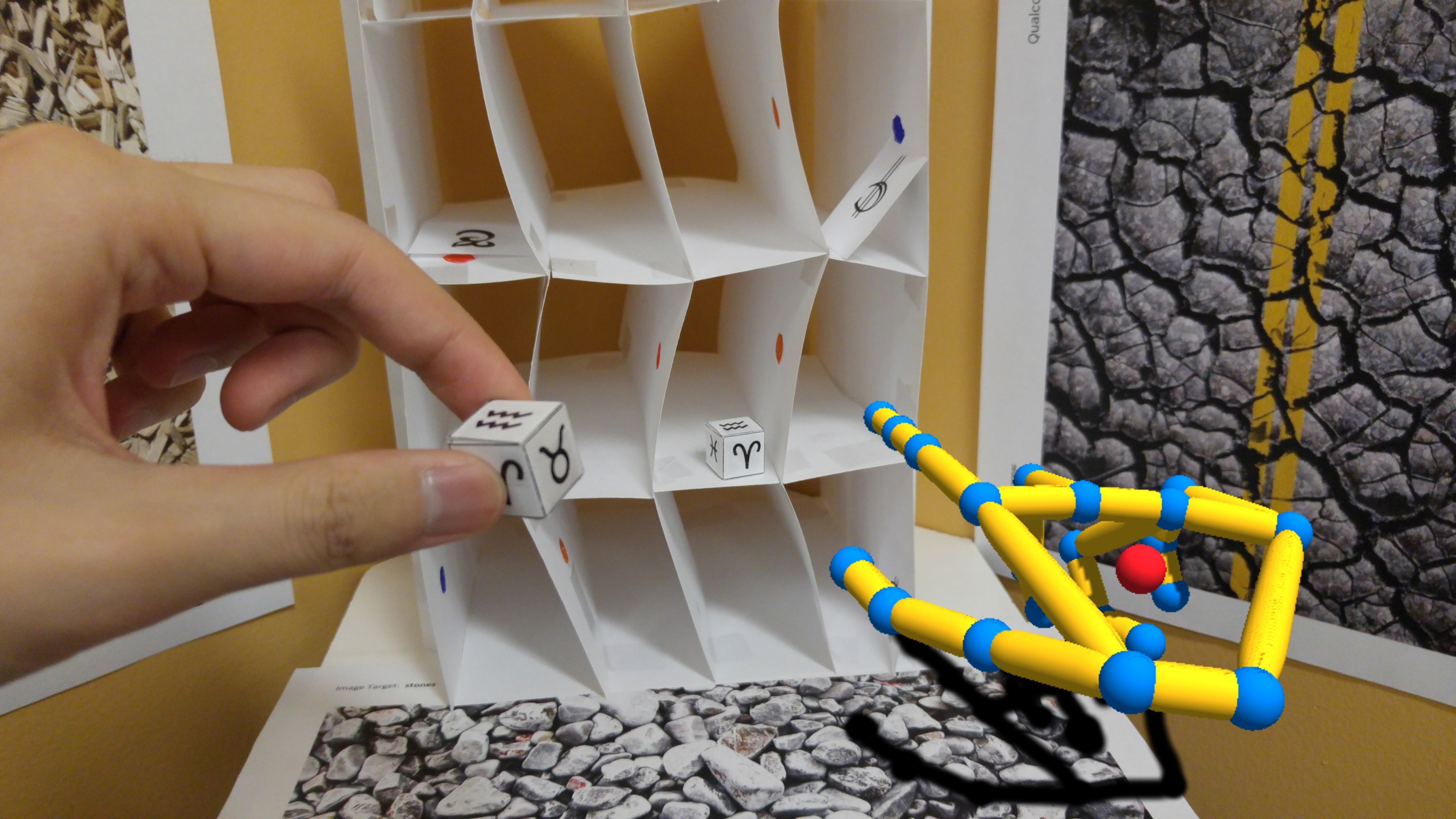

**A virtual hand (helper) pointing to the location where the worker should place the object.**

Collaboration in Augmented Reality

**A schematic of the remote collaboration scenario. A worker (left) requests assistance from a helper (right). The worker uses a head-mounted display (Google Cardboard) and a depth camera (Intel RealSense). Both work together on the worker's problem object. An AR tag is used to localize the hands to the environment.**

**The same schematic in real life. The helper uses hand gestures (appearing as virtual hands), which are then replicated and overlaid in the worker's display. The AR tags in the scene help ground the virtual hands to the world, as opposed to a traditional drawn-on-screen annotation.**
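
The idea of grounding the hands to an AR tag can be sketched as a change of coordinate frames. The following is a minimal, illustrative sketch in plain Python, not the Unity/Vuforia code used in the prototype. It assumes that each side can estimate the tag's pose (a rotation `R` and translation `t`) in its own camera frame, and that a hand point from the depth camera is expressed in the helper's camera frame. All function names and numbers are hypothetical.

```python
import math

def rot_y(deg):
    """3x3 rotation matrix about the y axis (angle in degrees)."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def mat_vec(R, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(R):
    """Transpose of a 3x3 matrix (the inverse, for a rotation)."""
    return [[R[j][i] for j in range(3)] for i in range(3)]

def camera_to_tag(R_tag, t_tag, p_cam):
    """Express a camera-space point in the tag's frame: R^T (p - t)."""
    d = [p_cam[i] - t_tag[i] for i in range(3)]
    return mat_vec(transpose(R_tag), d)

def tag_to_camera(R_tag, t_tag, p_tag):
    """Express a tag-space point in a camera's frame: R p + t."""
    r = mat_vec(R_tag, p_tag)
    return [r[i] + t_tag[i] for i in range(3)]

def relay_point(helper_pose, worker_pose, p_helper_cam):
    """Carry a hand point from the helper's camera to the worker's camera
    by passing through the shared tag frame (assumed setup)."""
    p_tag = camera_to_tag(*helper_pose, p_helper_cam)
    return tag_to_camera(*worker_pose, p_tag)

# Hypothetical poses: the tag as seen by each camera (metres).
helper_pose = (rot_y(0), [0.0, 0.0, 0.5])
worker_pose = (rot_y(90), [0.0, 0.0, 1.0])

# A fingertip 10 cm to the side of the tag, in the helper's camera frame.
fingertip = [0.1, 0.0, 0.5]
print(relay_point(helper_pose, worker_pose, fingertip))
```

Because the point is stored relative to the tag rather than to the screen, it stays attached to the same place in the workspace when the worker's viewpoint changes; only `worker_pose` needs to be re-estimated each frame.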
