Robot Improv Puppet Theatre

Collaborators: Claire Mikalauskas, Tiffany Wun, Kevin Ta, Joshua Horacsek, Lora Oehlberg

Tools: Node.js, Socket.io, JavaScript, Python, HTML, Arduino, C++

Abstract

In improvisational theatre (improv), actors perform unscripted scenes together, collectively creating a narrative. Audience suggestions introduce randomness and build audience engagement, but can be challenging to mediate at scale. We present Robot Improv Puppet Theatre (RIPT), which includes a performance robot (Pokey) who performs gestures and dialogue in short-form improv scenes based on audience input from a mobile interface. We evaluated RIPT in several initial informal performances, and in a rehearsal with seven professional improvisers. The improvisers noted how audience prompts can have a big impact on the scene, highlighting the delicate balance between ambiguity and constraints in improv. The open structure of RIPT performances allows for multiple interpretations of how to perform with Pokey, including one-on-one conversations or multi-performer scenes. While Pokey lacks key qualities of a good improviser, improvisers found his serendipitous dialogue and gestures particularly rewarding.

The project originally competed in the UIST 2017 Student Innovation Contest.

Paper: Claire Mikalauskas, Tiffany Wun, Kevin Ta, Joshua Horacsek, and Lora Oehlberg. 2018. Improvising with an Audience-Controlled Robot Performer. In Proceedings of the 2018 Designing Interactive Systems Conference (DIS ’18). ACM, New York, NY, USA, 657-666. DOI: https://doi.org/10.1145/3196709.3196757

Demo: Tiffany Wun, Claire Mikalauskas, Kevin Ta, Joshua Horacsek, and Lora Oehlberg. 2018. RIPT: Improvising with an Audience-Sourced Performance Robot. In Proceedings of the 2018 ACM Conference Companion Publication on Designing Interactive Systems (DIS ’18 Companion). ACM, New York, NY, USA, 323-326. DOI: https://doi.org/10.1145/3197391.3205397

Web Interface

**The RIPT web interface.**

Audience members access the crowdsourcing interface by visiting a web URL on their mobile devices before the start of the show. The mobile interface asks each audience member for a line of dialogue in response to one of the three prompts in the selected prompt set. Dialogue entries must be between 3 and 35 words long and must be paired with a selected gesture. We wrote the prompts to encourage a common character perspective across the audience input for a show. The prompts come in sets of three, and each set corresponds to one show. The interface also asks the audience member to select one of three gestures to go with their line of dialogue; the three options are drawn at random from the full list of available gestures. A behind-the-scenes director chooses the prompt set and the available gestures using the backstage controller.
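The submission rules described above can be illustrated with a minimal Python sketch. This is not the project's actual code: the function names, the gesture names, and the choice to count words by splitting on whitespace are all assumptions made for illustration.

```python
import random

MIN_WORDS, MAX_WORDS = 3, 35  # word limits stated in the text


def offer_gestures(all_gestures, k=3, rng=random):
    """Pick k distinct gestures at random from the director's list."""
    return rng.sample(list(all_gestures), k)


def is_valid_submission(dialogue, gesture, offered):
    """Accept a line only if it has 3 to 35 words and an offered gesture."""
    word_count = len(dialogue.split())  # assumption: whitespace-separated words
    return MIN_WORDS <= word_count <= MAX_WORDS and gesture in offered


# Hypothetical gesture names, for illustration only.
gestures = ["nod", "wave", "shrug", "bow", "point"]
offered = offer_gestures(gestures, rng=random.Random(0))
```

In this sketch, a two-word line or a gesture that was not among the three offered would be rejected before it reaches the backstage controller.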

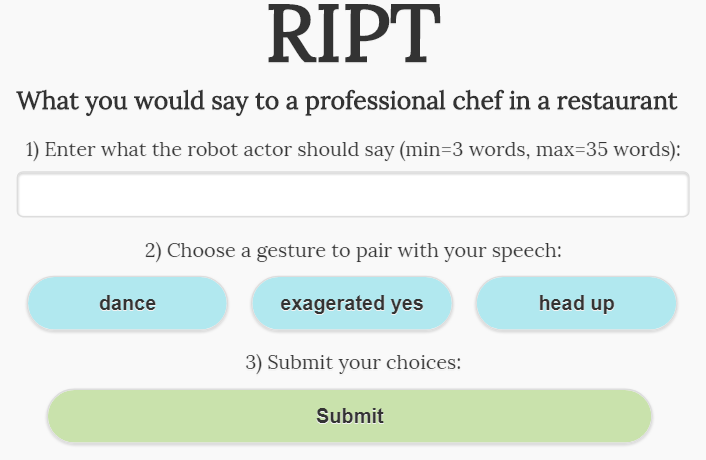

**Audience mobile interface. One random prompt from the chosen prompt set is presented per submission. The audience member supplies a line of dialogue paired with one of the three randomly presented gestures.**

Backstage Controller

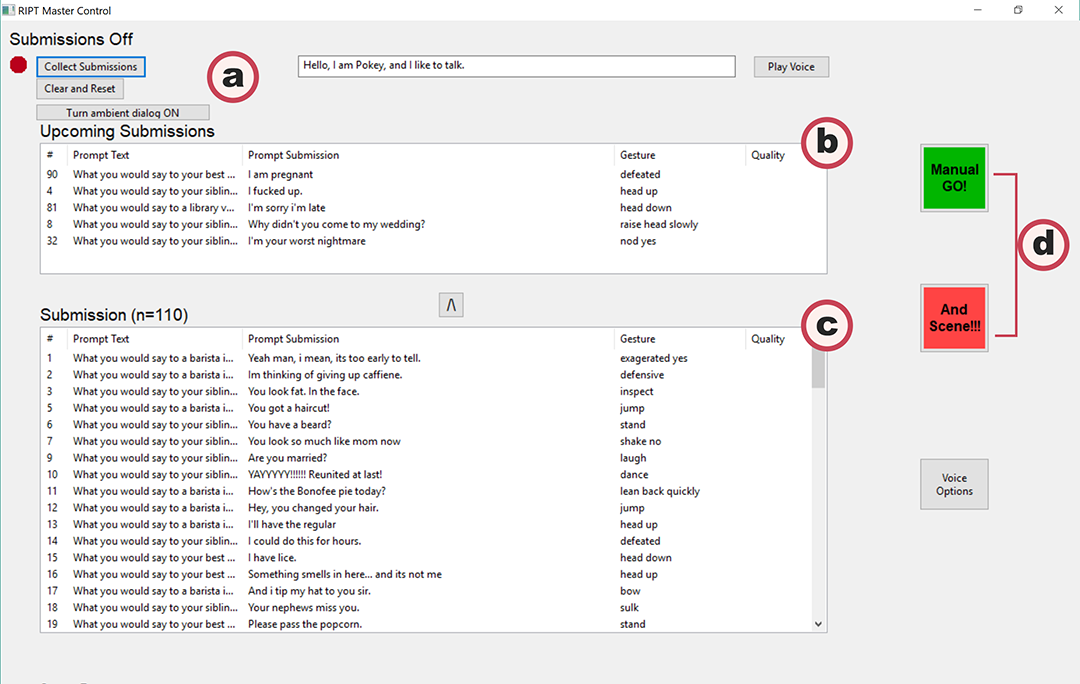

**The backstage controller interface includes: (a) controls to initiate submission collection; (b) a queue of the next five submissions, chosen randomly from the collection, to be used on upcoming GO button presses; (c) the full list of submissions; and (d) manual controls for hardware buttons.**

The backstage controller allows a stage director to initiate a show and collects submissions for Pokey. The director can remove inappropriate submissions, but cannot use the backstage controller to add submissions or to control their ordering. Once a submission has been removed or played through Pokey, it cannot be played again. To collect submissions, the director selects a set of prompts and gestures to be displayed on the mobile interface. A web server then opens the mobile interface for submissions from the audience. Submissions are automatically saved to a file and can be reloaded later. Once there is a satisfactory number of submissions, the director can close submissions and begin the show.

The backstage controller and the mobile interface connect to each other via a proxy server. Each mobile interface connects to this server, which manages all mobile interface connections and relays submissions to the backstage controller. The backstage controller receives submissions and updates the server (and therefore the mobile interfaces) with prompt sets, gestures, and submission collection status. The mobile interface was written in HTML5 and JavaScript, the server in Node.js with Socket.io, and the backstage controller in Python.
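The director's limited control over submissions (remove only, random order, no replay) can be sketched as a small Python class. This is a hypothetical illustration, not the project's implementation: the class name `SubmissionPool`, its methods, and the decision to shuffle the whole collection once up front are assumptions.

```python
import random


class SubmissionPool:
    """Holds audience submissions; the director may only remove them."""

    def __init__(self, submissions, queue_size=5, rng=None):
        self._rng = rng or random.Random()
        self._pending = list(submissions)
        # Assumption: random order is fixed by one shuffle, never by the director.
        self._rng.shuffle(self._pending)
        self._queue_size = queue_size

    def upcoming(self):
        """Return the next few submissions, as a queue panel might show them."""
        return self._pending[:self._queue_size]

    def remove(self, submission):
        """Discard an inappropriate submission so it can never be played."""
        self._pending.remove(submission)

    def go(self):
        """Play the next submission exactly once; return None when empty."""
        return self._pending.pop(0) if self._pending else None
```

Because `go()` pops from the pending list, a submission that has been played or removed cannot reappear, which matches the no-replay behaviour described above.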

Animation Rig

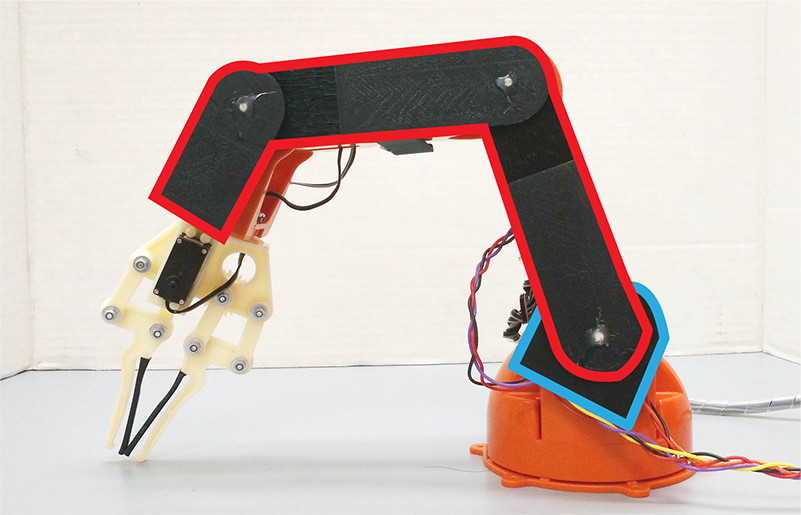

**Animation rig. Armature is outlined in red and base piece outlined in blue. Each joint uses a potentiometer to measure angles of the joint.**

As a system, RIPT facilitates the performance of dialogue and gesture by an Arduino Braccio Robot Arm ("Pokey"). First, we defined a series of gestures for Pokey to perform. To record gestures, we designed a custom animation rig that temporarily attaches to the exterior of the Braccio robot arm. The rig includes three lengths of 3D-printed armatures and one base piece. Each joint of the animation rig has a potentiometer whose value changes with the joint angle; as the animator moves the robot manually, the rig produces an analog value for the angle of each joint, which is captured as a keyframe. Very few of our planned gestures used the yaw rotation about the base of the Braccio; thus, we did not design a jig to capture the yaw rotation of the base. Instead, for gestures with yaw base rotation, we defined the yaw angle manually for multiple keyframes.
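A minimal Python sketch of turning potentiometer readings into a keyframe is shown below. It rests on several assumptions that the text does not confirm: a 10-bit analog reading (0 to 1023), a potentiometer with 270 degrees of travel, a linear response, and a dictionary as the keyframe format. The function names are hypothetical.

```python
ADC_MAX = 1023         # assumption: 10-bit analog reading
POT_SWEEP_DEG = 270.0  # assumption: total potentiometer travel in degrees


def reading_to_angle(reading, offset_deg=0.0):
    """Linearly map a raw analog reading to a joint angle in degrees."""
    return reading / ADC_MAX * POT_SWEEP_DEG - offset_deg


def capture_keyframe(readings, yaw_deg):
    """Build one keyframe from rig-measured joints and a hand-entered yaw."""
    joints = [round(reading_to_angle(r), 1) for r in readings]
    return {"yaw": yaw_deg, "joints": joints}


# Hypothetical readings for three joints, with the yaw angle typed in by hand.
keyframe = capture_keyframe([0, 512, 1023], yaw_deg=90)
```

The yaw value is passed in separately because, as described above, the rig does not measure base rotation.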
